Keeping up with the robots - maintaining human agency in an automated world

The Pathways for Prosperity Commission on Technology and Inclusive Development attended the We Robot 2018 conference on robotics, law and policy at Stanford University. The meeting helped the commission to understand the latest technology, and to think about the approaches governments could take as they navigate rapid change.

Tebello Qhotsokoane, Research and Policy Officer at the commission, reflects on how technology is affecting human agency and what this means for governments in the global south as they navigate their conversations with the private sector, citizens, civil society and other stakeholders.

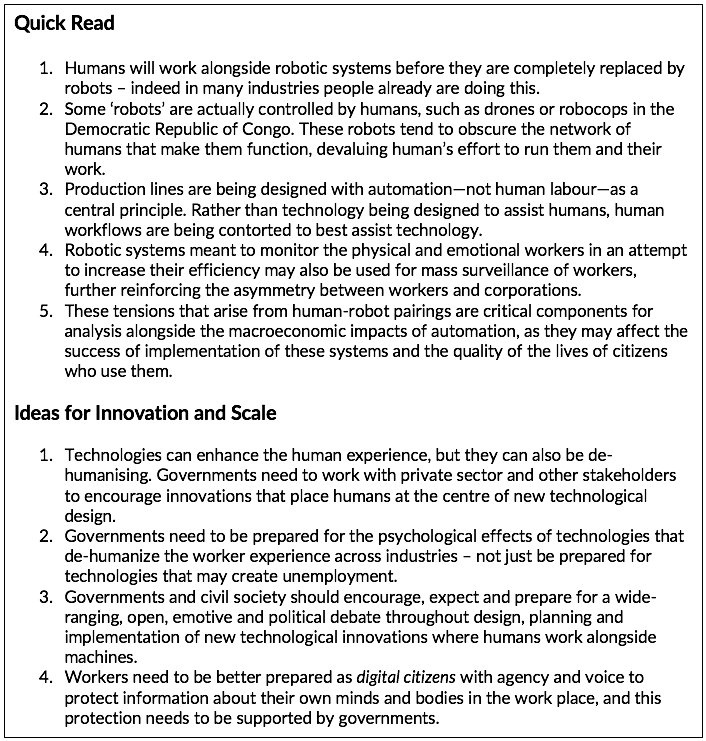

Humans are likely to work alongside machines long before they are completely replaced by them, yet much of the attention on technological disruption continues to centre on existential hysteria about the prospect of a jobless future. Myriad papers and news articles detail the rise of the machine, often conceptualised as robots and devices stepping into roles previously occupied by humans, who in turn become obsolete.

But the machines have already risen.

Humanoid robots like those in the Hollywood blockbuster I, Robot may not be running all industries while humans languidly contemplate their obsolescence, but robotic technologies have long been used in factories, manufacturing plants and mines. These technologies increase efficiency and overall economic productivity, hence their wide adoption.

Less discussed, but equally worthy of inquiry, is the influence that these high-tech solutions have on workers who operate alongside robots and autonomous systems. What impact do these technologies have on workers’ identities, on their sense of agency, or even on the ways that knowledge is acquired and perceived?

At the We Robot 2018 conference, Madeleine Elish, a researcher and cultural anthropologist at Data & Society, argued that robots disrupt workers’ agency in ways that harm workers’ sense of self. In her ethnography of drone pilots, she finds that unmanned drones are perceived as unmanned only because they obscure the networks of human labour required to create and maintain them. These ‘autonomous’ systems rob drone pilots of agency and, in so doing, diminish their stature in the eyes of colleagues, which in turn damages the pilots’ own self-perception. This ‘obscuring’ does not only affect abstract qualities like ‘identity’ and ‘self-worth’; it can also have material consequences for drone pilots. Because drone pilots operate unmanned aircraft from remote locations many miles from the front lines, they are excluded from the traditional awards associated with on-the-field combat. These awards are linked to career progression and, in some instances, to salaries and benefits, so not being seen as a real pilot affects their career outcomes.

These arguments about obscured labour are not limited to drone pilots in the United States. Similar phenomena can be seen in the Democratic Republic of the Congo, where ‘robocops’ are used at busy traffic intersections in the capital, Kinshasa. These robocops are maintained by human labour whose contribution is neither acknowledged nor praised. The robocops themselves have become the object of public adoration, with some outlets reporting that ‘people prefer them to human traffic police.’ When declines in congestion and traffic accidents are attributed to the robots, the officers who monitor the footage the robots collect experience the same loss of agency as drone pilots, and may even be excluded from the bonuses for good service that traffic police on the ground can receive.

In Kinshasa, the success of the robocops, while lauded, has its limits. Human police officers can chase motorists who run red lights and raise civic awareness; their robocop counterparts cannot. The popularity of the robocops has also reinforced mistrust of human police officers, who are seen as corruptible. For many traffic officers in Kinshasa there is very little incentive to do their work well, not least because it is a poorly paid job with ample opportunity for low-level corruption. With the new robots threatening their social and even their human standing, what little incentive they had to do good work has only diminished further.

This loss of agency takes a different form on the factory floor. As Ling-Fei Lin of Harvard University highlighted at the We Robot 2018 conference, the large Taiwan-based laptop manufacturer Quanta has started decentring the factory worker and centring robots in its design processes, an approach called Designing for Automation. This approach requires R&D teams in hardware manufacturing to “change their long-time experiences and design practices so that they could work well with their robotic ‘colleagues’”, Lin states.

Factory workers are tasked with accommodating the technology, not the other way around, which means they are constantly keeping up, relearning and readjusting. This causes stress for workers, who must complete tasks at the pace of robots that perform consistently and never tire. The stress is compounded by the fact that robots make stoic work companions, so some workers do not even have the comfort of a chatty co-worker. This may not only leave them feeling inadequate but also make them feel that the clock is ticking on the value of their labour and, consequently, on their social position.

Beyond the loss of agency, humans working with intelligent machines also have to worry about surveillance and the normalisation of intrusive devices. In the draft of her book Data Driven: Truckers and the New Workplace Surveillance, Karen Levy, a sociologist and legal scholar at Cornell University, posits that truck drivers may have to worry about Big Brother before self-driving trucks take their jobs. Levy analyses the intelligent systems designed to aid truckers in their work, some of which are already in use, and describes the pernicious effects of these assistive technologies on workers. Technology meant to monitor drowsiness, for example, could become a surveillance device used against the driver. According to Levy’s research, the sensing and monitoring systems integrated into trucks, as well as the technology embedded in drivers’ vests and hats, can be a particular nuisance for truckers. Truckers are stereotyped as a self-selecting group of ‘macho’ men who revel in being alone on the open road. Introducing surveillance into this industry directly opposes that identity, and it has already provoked strong resistance from truckers who are forced or strongly encouraged to use the technology.

The threat of surveillance is not unique to the trucking industry. In China, State Grid Zhejiang Electric Power, a state-run company, uses Neurocaps, a technology that places wireless sensors in employees’ caps or hats; combined with artificial intelligence algorithms, these sensors detect signs of workplace rage, anxiety or sadness. The technology is meant to help managers monitor workflow patterns and anticipate fatigue, so that they can pre-emptively ask workers to take breaks and thereby increase productivity. Good intentions notwithstanding, the information these systems gather could also be used to unfairly dismiss or retire workers. Chinese news outlets report that workers in these factories, like the truckers in Levy’s research, resisted the technology because of discomfort and feelings of intrusion. But in these factories the power asymmetry between workers and management means that workers must tacitly accept Neurocaps, giving the companies unprecedented access to workers’ personal mental health data.

The South China Morning Post quotes Qiao Zhian, a professor of management at a university in Beijing, who says “lawmakers should act now to limit the use of emotional surveillance and give workers more bargaining power to protect their interests.” His conclusion is clear and resolute: “the human mind should not be exploited for profit.”

The discussion of technology’s impact on people is a useful complement to the well-documented conversation about its impact on employment statistics, global value chains and economic growth. When we discuss the economic forces at play in automation, it is easy to forget that there are people behind the numbers and colourful charts. Questions of identity, agency, privacy and knowledge creation are critical to exploring the role that technology plays, and could play, in society.

Indeed, understanding these new relationships between humans and machines could inform the ways that systems are deployed to achieve the maximum benefit to society. In this research, as in all research, it is important that these studies be carried out not only in developed countries but also in developing countries, where nascent governance institutions and often weaker labour regulations may magnify the effects of surveillance and labour augmentation, including their psychological impact on workers.